Edge computing is emerging as one of the most significant shifts in how computing infrastructure is built. As data volumes grow and latency becomes a critical factor in user experience, edge computing offers a different model: bringing computation and data storage closer to where data is generated. This shift is reshaping everything from smart devices and autonomous vehicles to industrial IoT and healthcare. In this article, we explore the fundamentals of edge computing, its advantages, use cases, and why it’s poised to redefine the future of cloud infrastructure.

1. What Is Edge Computing?

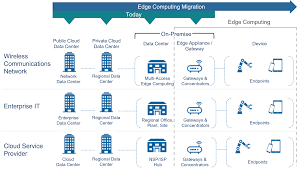

At its core, edge computing refers to the practice of processing data at or near the source of data generation, rather than relying solely on centralized cloud data centers. This means running workloads on edge devices such as IoT sensors, gateways, or local edge servers.

By decentralizing data processing, edge computing reduces the distance data must travel, lowering latency, decreasing bandwidth usage, and enabling real-time analytics.
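To make the distance argument concrete, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at about 200 km per millisecond, and the two distances are hypothetical. It counts propagation delay only and ignores routing, queuing, and processing time, so real round trips will be slower.

```python
# Assumption: signals in optical fiber travel at roughly 200,000 km/s,
# i.e. about 200 km per millisecond (around two thirds of the speed of light).
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Best-case propagation delay for a request and its response."""
    return 2 * distance_km / FIBER_KM_PER_MS


cloud_ms = round_trip_ms(2000)  # hypothetical distant cloud region
edge_ms = round_trip_ms(10)     # hypothetical nearby edge server
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms")
# prints "cloud: 20.0 ms, edge: 0.1 ms"
```

Even in this idealized case, the nearby server starts with a large head start before any actual computation happens.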

2. Why Cloud Alone Isn’t Enough

Cloud computing has revolutionized how we store and manage data, but it has limitations, especially for latency-sensitive or bandwidth-intensive applications. As more devices generate data in real time—autonomous vehicles, industrial robots, AR/VR systems—the need for faster response times and reduced network loads becomes critical.

Edge computing complements the cloud by offloading certain tasks to the edge, where immediate decisions or actions are necessary. The result is a hybrid architecture that combines the scalability of the cloud with the speed and efficiency of the edge.
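One way to picture this hybrid split is a small placement policy that decides, per task, whether to run at the edge or in the cloud. The sketch below is illustrative only: the `Task` fields, the `choose_tier` function, and the latency figure are all hypothetical, and a real system would weigh many more factors such as cost, device load, and data residency.

```python
from dataclasses import dataclass

# Assumed typical round trip to the cloud, in milliseconds (illustrative value).
CLOUD_RTT_MS = 80.0


@dataclass
class Task:
    name: str
    deadline_ms: float       # how quickly a response is needed
    needs_global_data: bool  # True if the task depends on data held only in the cloud


def choose_tier(task: Task) -> str:
    """Return "edge" or "cloud" for a task under a deliberately simple policy."""
    if task.needs_global_data:
        return "cloud"  # the data lives centrally, so the work goes there
    if task.deadline_ms < CLOUD_RTT_MS:
        return "edge"   # a cloud round trip alone would miss the deadline
    return "cloud"      # no urgency: use the cloud's scalable capacity


for t in [
    Task("obstacle-detection", deadline_ms=10, needs_global_data=False),
    Task("monthly-report", deadline_ms=60_000, needs_global_data=False),
    Task("fleet-wide-trends", deadline_ms=5_000, needs_global_data=True),
]:
    print(t.name, "->", choose_tier(t))
```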

3. Key Benefits of Edge Computing

- Low Latency: By processing data locally, edge computing enables near-instantaneous response times, essential for applications like autonomous driving or remote surgery.

- Reduced Bandwidth Costs: Only relevant or summarized data needs to be sent to the cloud, reducing network congestion and data transfer costs.

- Enhanced Security and Privacy: Local processing minimizes the need to send sensitive data to centralized servers, lowering the risk of interception or breaches.

- Reliability: Edge devices can operate independently of the cloud during outages or network disruptions, ensuring continuous service.
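The bandwidth point above can be sketched as an edge node that collapses a window of raw sensor readings into a compact summary before uploading. This is a minimal illustration with synthetic data; the `summarize` function, the record fields, and the alert threshold are assumptions rather than part of any particular platform.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize(readings: List[float], threshold: float) -> Dict[str, object]:
    """Collapse a window of raw readings into a small summary record."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Keep only the readings that exceed the threshold in full detail.
        "alerts": [r for r in readings if r > threshold],
    }


# Synthetic window: 600 temperature readings with one abnormal spike.
window = [20.0 + 0.5 * (i % 10) for i in range(600)]
window[300] = 95.0

summary = summarize(window, threshold=80.0)
raw_bytes = len(json.dumps(window))
summary_bytes = len(json.dumps(summary))
print(summary)
print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

The cloud still learns about the spike and the overall trend, but the node sends one small record instead of every sample.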

4. Use Cases Across Industries

Edge computing is driving innovation across multiple sectors:

- Manufacturing: Predictive maintenance, quality control, and real-time analytics are optimized through edge-enabled machinery and sensors.

- Healthcare: Real-time patient monitoring, wearable health devices, and point-of-care diagnostics benefit from localized processing.

- Retail: Smart shelves, in-store analytics, and automated checkout systems use edge computing to enhance customer experience.

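- Autonomous Vehicles: Self-driving cars must make split-second decisions based on large volumes of sensor data. A round trip to a distant data center is too slow and too dependent on connectivity for that, so the processing has to happen on or near the vehicle.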

- Smart Cities: Traffic monitoring, surveillance, and public safety systems rely on edge analytics to operate efficiently and respond to events in real time.

5. The Role of 5G in Edge Adoption

5G networks offer the high throughput and low-latency connectivity that many edge deployments depend on. With faster data transfer and improved device connectivity, 5G enables more seamless integration between edge devices and central systems.

Together, 5G and edge computing can power real-time applications like augmented reality, immersive gaming, and telemedicine with unprecedented speed and reliability.

6. Challenges to Overcome

Despite its promise, edge computing presents several hurdles:

- Infrastructure Investment: Building and managing distributed edge networks requires substantial investment.

- Data Management: Ensuring data consistency and integrity across thousands of distributed nodes is complex.

- Security: Edge devices can be vulnerable to physical tampering or cyberattacks, demanding robust security protocols.

- Interoperability: Standardizing protocols and ensuring seamless integration with existing cloud systems remain ongoing challenges.

7. Edge and AI: A Powerful Duo

Artificial intelligence at the edge, often called edge AI, is a rapidly growing field. Running AI models locally enables faster inference and real-time decision-making. For example, a surveillance camera with edge AI can flag suspicious behavior on the device and raise an alert immediately, without first sending video to the cloud for analysis.

Advances in chip design and lightweight AI frameworks are making it feasible to deploy complex models on resource-constrained edge devices.
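One common technique for fitting models onto constrained hardware is quantization, which stores weights as small integers instead of 32-bit floats. The article does not name a specific method, so treat the following as an illustrative sketch of symmetric 8-bit quantization in plain Python, with hypothetical function names and made-up weights, not as any framework's actual implementation.

```python
from typing import List, Tuple


def quantize(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0, 0.731]  # made-up example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"largest rounding error: {max_error:.5f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.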

8. The Future of Edge Computing

Looking ahead, edge computing will become a critical component of the broader IT ecosystem. We can expect to see:

- Edge-native applications: Software designed specifically to run on decentralized, distributed architectures.

- Autonomous edge systems: Self-managing edge environments capable of orchestration and resource optimization.

- Federated learning: Training AI models across distributed data sources without transferring data to a central location, preserving privacy.
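The federated learning idea in the list above can be sketched with a toy model. In this simplified example each node fits a single weight `w` for `y ≈ w * x` on its own private samples, and only the trained weight, never the data, is sent back to be averaged. The data, learning rate, and function names are assumptions chosen for illustration; production systems add secure aggregation, client sampling, and much larger models.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_train(w: float, data: List[Sample], lr: float = 0.05, steps: int = 20) -> float:
    """Run a few gradient-descent steps on one node's private data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, nodes: List[List[Sample]]) -> float:
    """Average the locally trained weights, weighted by each node's sample count."""
    total = sum(len(d) for d in nodes)
    return sum(local_train(w_global, d) * len(d) for d in nodes) / total


# Three nodes with private data that all follow y = 3x (synthetic).
nodes = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, nodes)
print(f"learned weight: {w:.4f}")
```

The coordinator only ever sees one number per node per round, which is the property that makes the approach attractive for privacy-sensitive data.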

As enterprises prioritize agility, responsiveness, and customer-centric services, edge computing will be instrumental in enabling next-generation digital experiences.

Conclusion

Edge computing is not just an upgrade to cloud—it’s a shift in how we think about data processing, connectivity, and computing architecture. By bringing computation closer to the source of data, it unlocks new possibilities in speed, efficiency, and innovation.

As 5G networks expand and edge devices become more powerful, businesses that embrace this shift will be better positioned to lead in the digital-first future. The edge is already here, and it is changing how applications are built and deployed.